PyNeRF

Pyramidal Neural Radiance Fields

A fast NeRF anti-aliasing strategy.

Installation

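First, install Nerfstudio and its dependencies. Then install the PyNeRF extension and torch-scatter:

```bash
pip install git+https://github.com/hturki/pynerf
pip install torch-scatter -f https://data.pyg.org/whl/torch-${TORCH_VERSION}+${CUDA}.html
```

Replace `${TORCH_VERSION}` and `${CUDA}` with your installed PyTorch version and CUDA tag (for example, `2.0.0` and `cu118`).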
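If you would rather not fill in the torch-scatter placeholders by hand, the small helper below derives the `-f` URL from version strings. It is a sketch under stated assumptions: the function name `torch_scatter_index_url` is hypothetical, it assumes the PyG index follows the `torch-{version}+{cuda_tag}.html` pattern used in the command above, and it assumes CPU-only builds use a `cpu` tag. Whether PyG publishes an index for your exact PyTorch version is not guaranteed, so check that the page loads.

```python
from typing import Optional


def torch_scatter_index_url(torch_version: str, cuda_version: Optional[str]) -> str:
    """Build the find-links URL for `pip install torch-scatter -f ...` (hypothetical helper).

    torch_version: e.g. the value of torch.__version__, such as "2.0.1+cu118".
    cuda_version: e.g. the value of torch.version.cuda, such as "11.8",
                  or None for a CPU-only PyTorch build.
    """
    # Drop any local build suffix such as "+cu118" from the PyTorch version.
    base = torch_version.split("+", 1)[0]
    # "11.8" -> "cu118"; CPU-only builds fall back to the "cpu" tag (assumed naming).
    tag = "cu" + cuda_version.replace(".", "") if cuda_version else "cpu"
    return f"https://data.pyg.org/whl/torch-{base}+{tag}.html"
```

In practice you would call it with `torch.__version__` and `torch.version.cuda` from your installed PyTorch and pass the result to `pip install torch-scatter -f`.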

Running PyNeRF

Three default configurations are provided; by default they use the MipNeRF 360 and Multicam dataparsers. Other dataparsers can be selected through the `ns-train` command, e.g. `ns-train pynerf nerfstudio-data --data <your data dir>` to use the Nerfstudio dataparser.

The default configurations provided are:

| Method | Description | Scene type | Memory |
|---|---|---|---|
| | Tuned for outdoor scenes, uses proposal network | outdoors | ~5 GB |
| | Tuned for synthetic scenes, uses proposal network | synthetic | ~5 GB |
| | Tuned for Multiscale Blender, uses occupancy grid | synthetic | ~5 GB |

The main differences between them are whether they are suited to synthetic/indoor scenes or to real-world unbounded scenes (in which case appearance embeddings and scene contraction are enabled), and whether sampling is done with a proposal network (usually better for real-world scenes) or an occupancy grid (usually better for single-object synthetic scenes like Blender).
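Scene contraction lets an unbounded scene fit into a bounded volume, so that a fixed-size spatial encoding can cover it. As an illustration only, here is a minimal NumPy sketch of the contraction function introduced in mip-NeRF 360: points inside the unit ball are left unchanged, and points outside are mapped into the shell between radius 1 and 2 via contract(x) = (2 - 1/||x||)(x/||x||). It assumes an L2 norm; the exact contraction PyNeRF uses (including the choice of norm) is not specified here and may differ.

```python
import numpy as np


def contract(x: np.ndarray) -> np.ndarray:
    """mip-NeRF 360-style contraction (illustrative sketch, L2 norm assumed).

    x: array of shape (..., 3) holding xyz positions.
    Returns an array of the same shape whose points all lie within radius 2.
    """
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    # Guard against division by zero at the origin; those points keep x anyway.
    safe_norm = np.maximum(norm, 1e-12)
    # Far points: direction is preserved, radius approaches 2 as ||x|| grows.
    outside = (2.0 - 1.0 / safe_norm) * (x / safe_norm)
    # Points inside the unit ball are passed through unchanged.
    return np.where(norm <= 1.0, x, outside)
```

A point at distance 2 from the origin lands at distance 1.5, and even a point a million units away stays just inside radius 2, which is what allows distant background content to share a bounded representation.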

Method

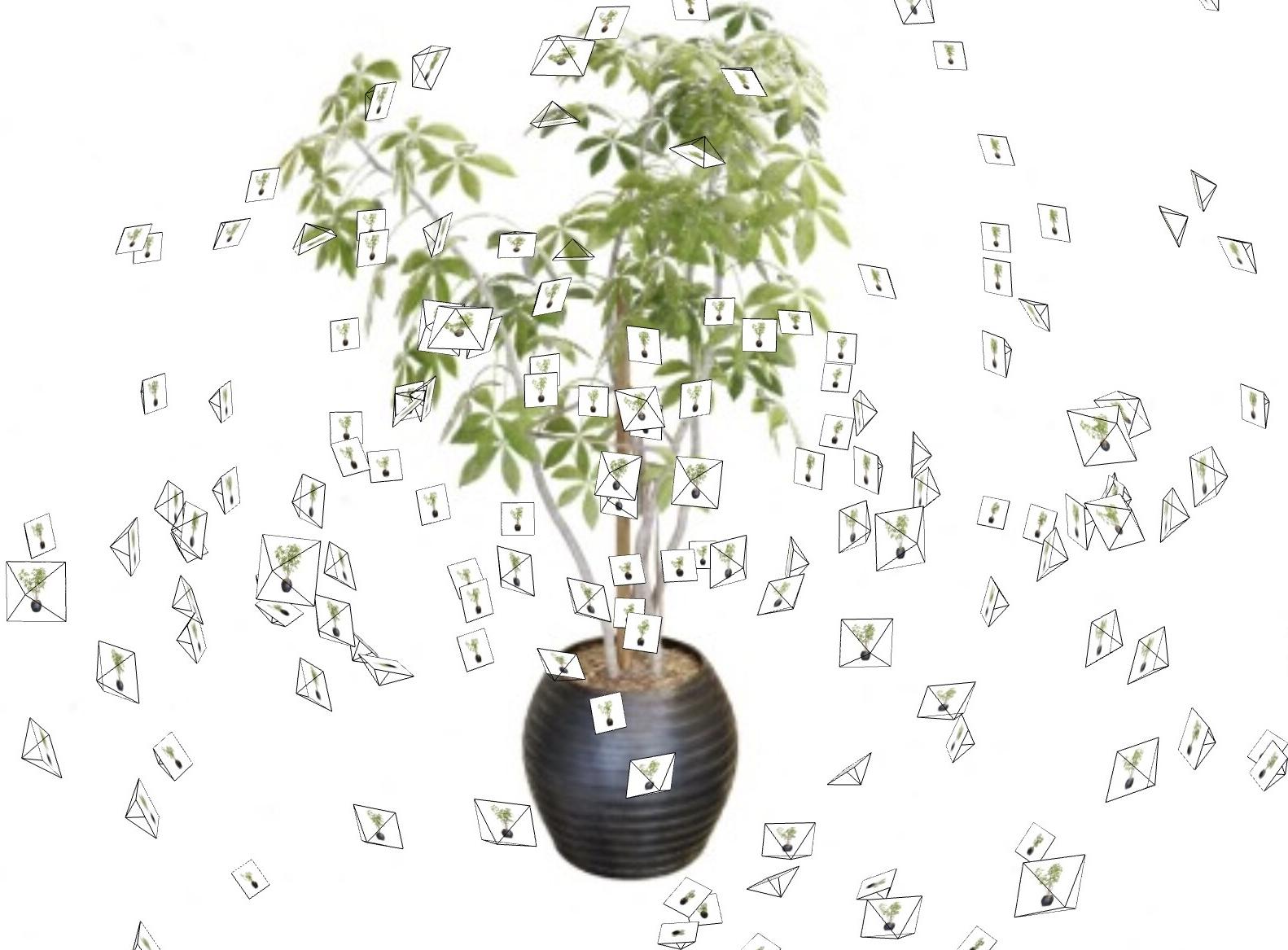

Most NeRF methods assume that training and test-time cameras capture scene content from a roughly constant distance.

They degrade and render blurry views in less constrained settings.

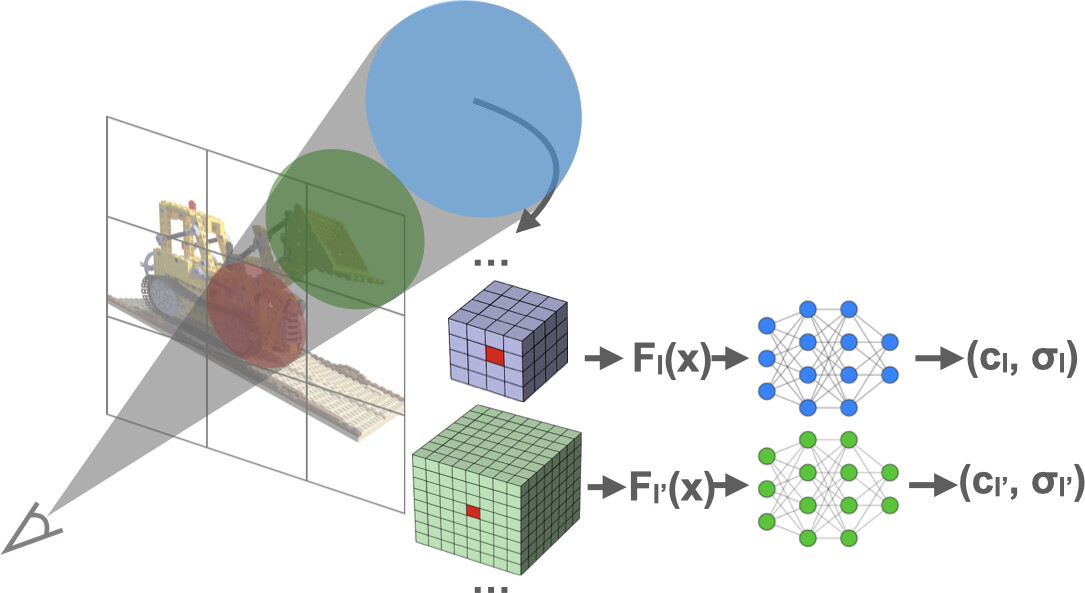

This is due to NeRF being scale-unaware, as it reasons about point samples instead of volumes. We address this by training a pyramid of NeRFs that divide the scene at different resolutions. We use “coarse” NeRFs for far-away samples, and finer NeRFs for close-up samples:
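To make the coarse-for-far, fine-for-near idea concrete, here is a minimal, hypothetical sketch. It is not PyNeRF's actual code or level formula. It assumes a cone-shaped pixel footprint whose radius grows linearly with sample depth `t`, that each pyramid level halves the feature size resolvable by the level before it, and that the outputs of the two nearest levels are linearly blended. The names `sample_radius`, `pyramid_level`, `blend_levels`, `pixel_radius`, and `finest_radius` are all illustrative.

```python
import math
from typing import Sequence


def sample_radius(t: float, pixel_radius: float) -> float:
    """Footprint radius of a sample at depth t along a ray (cone model).

    pixel_radius: hypothetical world-space radius of the pixel at unit depth.
    """
    return t * pixel_radius


def pyramid_level(radius: float, finest_radius: float, num_levels: int) -> float:
    """Hypothetical continuous level: 0 = coarsest, num_levels - 1 = finest.

    Assumes each level halves the resolvable feature size, so a footprint
    twice the finest cell size drops exactly one level.
    """
    # Number of halvings separating this footprint from the finest cell size;
    # footprints smaller than the finest cell are clamped to the finest level.
    octaves_coarser = math.log2(max(radius, finest_radius) / finest_radius)
    level = (num_levels - 1) - octaves_coarser
    return min(max(level, 0.0), num_levels - 1)


def blend_levels(level_outputs: Sequence[float], level: float) -> float:
    """Linearly interpolate per-level outputs (e.g. densities) at a fractional level."""
    lo = math.floor(level)
    hi = min(lo + 1, len(level_outputs) - 1)
    w = level - lo
    return (1.0 - w) * level_outputs[lo] + w * level_outputs[hi]
```

With 8 levels, a sample whose footprint equals the finest cell size maps to level 7 (the finest), one twice as large maps to level 6, and very distant samples clamp to level 0, so far-away samples are answered by coarse models and close-up samples by fine ones.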